Monday, June 28, 2021

Types of Graph Analysis

Monday, June 21, 2021

Fintech Use Case for Graph Analytics

In my previous blog, I wrote about high-level use cases for graph analytics. In today's blog, let's dive deeper and look at how fintech companies can use graph analytics to allocate credit to customers and manage risk.

Today, banks and other fintech companies have access to vast amounts of information, but their current databases and IT solutions cannot make the best use of it. Customer information such as KYC data and other demographic data is often stored in a traditional RDBMS, while transactional data sits in a separate database. Customer interactions on web and mobile apps are stored in big data (HDFS) stores, and data from social networks or other external networks about customers is often not used at all.

This is where graph databases such as Neo4j or Oracle Autonomous Database come into play.

A graph database can connect the dots between different sources of information and one can build a really cool, intelligent AI solution to make predictions on future purchases, credit needs, and risks. Prediction data can then be validated with actual transactional data to iterate and build better models.

Graph databases are built for high scalability and performance. Several open-source algorithms and libraries can detect connected components and find connections within the data. You can evaluate these connections and start making predictions, which should improve over time as more data is validated.
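To make "connecting the dots" concrete, here is a minimal sketch in plain Python rather than a graph database. It assumes hypothetical customer records pulled from separate silos (KYC, app logs) that share identifiers such as a phone number or device ID. Customers are linked when they share an identifier, and union-find then groups them into connected components, one of the basic component-detection algorithms that graph libraries provide.

```python
from collections import defaultdict

# Hypothetical records pulled from separate silos: (customer_id, attribute, value)
RECORDS = [
    ("c1", "phone", "555-0101"),   # e.g. from the KYC database
    ("c2", "phone", "555-0101"),
    ("c2", "device", "dev-A"),     # e.g. from web/mobile app logs
    ("c3", "device", "dev-A"),
    ("c4", "email", "d@example.com"),
]

def find(parent, x):
    """Return the root of x, halving the path as we walk up."""
    while parent[x] != x:
        parent[x] = parent[parent[x]]
        x = parent[x]
    return x

def union(parent, a, b):
    """Merge the groups containing a and b."""
    parent[find(parent, a)] = find(parent, b)

def connected_customers(records):
    """Group customers linked, directly or transitively, by a shared attribute value."""
    parent = {}
    owners = defaultdict(list)  # (attribute, value) -> customers that have it
    for cust, attr, value in records:
        parent.setdefault(cust, cust)
        owners[(attr, value)].append(cust)
    for custs in owners.values():
        for other in custs[1:]:
            union(parent, custs[0], other)
    groups = defaultdict(set)
    for cust in parent:
        groups[find(parent, cust)].add(cust)
    return sorted(sorted(g) for g in groups.values())

print(connected_customers(RECORDS))  # [['c1', 'c2', 'c3'], ['c4']]
```

Note that c1 and c3 share no attribute directly, yet they land in the same group through c2. In a graph database, the same grouping would typically be a query or a built-in algorithm instead of application code.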

Wednesday, June 16, 2021

BFSI Use cases for Graph Analytics

Graph analytics is used to analyze relationships among entities such as customers, products, operations, and devices. Businesses run on these relationships: between customers, between customers and products, in how, where, and when customers use products, and in how business operations affect those relationships. In a nutshell, it is like analyzing social networks, and financial companies can gain immensely by using graph analytics.

Let's look at the four biggest use cases of graph analytics in the world of finance.

- Stay Agile in Risk & Compliance

Financial services firms today face increased regulation in risk and compliance reporting. Rather than update data manually across silos, today's leading financial organizations use Neo4j to unite data silos into a federated metadata model. This lets them trace and analyze their data across the enterprise and update their models in real time.

- Fraud Protection

Dirty money is passed around to blend it with legitimate funds and is then turned into hard assets. Graph analytics can detect circular money transfers, which helps prevent money laundering via money mules. It discovers networks of individuals or common transfer patterns in real time, which helps prevent common frauds such as illegal ATM transactions. Data such as IP addresses, cards used, branch locations, and the timing of transfers can be instantly tied to individuals to stop fraudulent transactions.

- Leverage Data Across Teams

Data is the lifeblood of finance. Companies strive to actively collect, store and use data. At the same time, financial companies are governed by laws, regulations, and standards around data. The burden of being compliant and ensuring data privacy has become ever more complex and expensive.

Graph analytics allows tracking data lineage through the data lifecycle. Data can be tracked and navigated vertex by vertex by following the edges. With graph analytics, it is possible to follow the path and find where information originated, where it was copied, and where it was used. This makes it easier to remain compliant and to use data for its full value.

- Capture a 360-Degree View of Customers

Marketing is all about understanding the relationships between customers and products. Knowing the relationships among customers, their transactions, and products builds a 360-degree view of customers. That view can be used for better marketing and to give customers what they want more effectively.
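The circular-transfer detection described under Fraud Protection can be sketched as a cycle search over a directed graph of transfers. Below is a minimal plain-Python illustration with hypothetical account IDs and amounts. A production system would also weigh amounts, timing, and the other signals mentioned above, and would usually run such queries inside a graph database.

```python
# Hypothetical transfers: (from_account, to_account, amount)
TRANSFERS = [
    ("A", "B", 9000),
    ("B", "C", 8900),
    ("C", "A", 8800),   # money returns to A: a circular flow
    ("D", "E", 500),
]

def find_cycles(transfers):
    """Return each simple cycle once, as a tuple starting at its smallest account ID."""
    graph = {}
    for src, dst, _amount in transfers:
        graph.setdefault(src, []).append(dst)
    cycles = set()

    def dfs(start, node, path, on_path):
        for nxt in graph.get(node, []):
            if nxt == start:
                cycles.add(tuple(path))  # closed a loop back to the start account
            elif nxt not in on_path and nxt > start:
                # Only visit accounts "larger" than start, so each cycle is found
                # exactly once, from its smallest account.
                on_path.add(nxt)
                path.append(nxt)
                dfs(start, nxt, path, on_path)
                path.pop()
                on_path.remove(nxt)

    for start in sorted(graph):
        dfs(start, start, [start], {start})
    return sorted(cycles)

print(find_cycles(TRANSFERS))  # [('A', 'B', 'C')]
```

Enumerating every cycle can grow expensive on large graphs. Real deployments typically bound the cycle length or the time window of the transfers considered.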

Friday, April 10, 2020

Friday, August 17, 2018

4 Types of Data Analytics

Data analytics can be classified into 4 types based on complexity and value. In general, the most valuable analytics are also the most complex.

1. Descriptive analytics

Descriptive analytics answers the question: What is happening now?

For example, in IT management, it tells how many applications are running at a given instant and how well those applications are performing. Tools such as Cisco AppDynamics and SolarWinds NPM collect huge volumes of data, analyze it, and present it in an easy-to-read format.

Descriptive analytics compiles raw data from multiple data sources to give valuable insights into what is happening and what happened in the past. However, it does not tell what is going wrong or explain why. Instead, it helps trained managers and engineers understand the current situation.
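At its simplest, descriptive analytics is aggregation. The sketch below uses made-up application names and response times as stand-ins for what a monitoring tool collects. It compiles the raw samples into a per-application summary, which describes the current state but says nothing about why a number looks bad.

```python
from statistics import mean

# Hypothetical monitoring samples from several sources: (application, response_time_ms)
SAMPLES = [
    ("billing", 120), ("billing", 180), ("billing", 150),
    ("web", 80), ("web", 95),
]

def describe(samples):
    """Summarize what is happening: request count, mean and max response time per application."""
    by_app = {}
    for app, ms in samples:
        by_app.setdefault(app, []).append(ms)
    return {
        app: {"count": len(times), "mean_ms": mean(times), "max_ms": max(times)}
        for app, times in sorted(by_app.items())
    }

for app, stats in describe(SAMPLES).items():
    print(app, stats)
```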

2. Diagnostic analytics

Diagnostic analytics uses real-time and historical data to automatically deduce what has gone wrong and why. Typically, diagnostic analysis is used for root cause analysis to understand why things have gone wrong.

Large amounts of data are used to find dependencies and relationships and to identify patterns that give deep insight into a particular problem. For example, the Dell EMC Service Assurance Suite can provide fully automated root cause analysis of IT infrastructure. This helps IT organizations rapidly troubleshoot issues and minimize downtime.
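One common idea behind automated root cause analysis is to walk the infrastructure's dependency graph. When many components alarm at once, the likely culprit is an alarming component whose own dependencies are all healthy. The sketch below illustrates that heuristic with a hypothetical dependency map. It is a simplified stand-in, not a description of how the Dell EMC suite works internally.

```python
# Hypothetical dependency map: component -> components it depends on
DEPENDS_ON = {
    "web-app": ["app-server"],
    "app-server": ["database", "auth-service"],
    "auth-service": [],
    "database": ["storage-array"],
    "storage-array": [],
}

def probable_root_causes(depends_on, alarming):
    """Return alarming components none of whose direct or indirect dependencies are alarming."""
    def dependencies(component, seen=None):
        seen = set() if seen is None else seen
        for dep in depends_on.get(component, []):
            if dep not in seen:
                seen.add(dep)
                dependencies(dep, seen)
        return seen

    return sorted(c for c in alarming if not (dependencies(c) & alarming))

alarms = {"web-app", "app-server", "database", "storage-array"}
print(probable_root_causes(DEPENDS_ON, alarms))  # ['storage-array']
```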

3. Predictive analytics

Predictive analytics tells what is likely to happen next.

It uses historical data to identify patterns of events and predict what will happen next. Descriptive and diagnostic analytics are used to detect tendencies, clusters, and exceptions, and predictive analytics is built on top of them to predict future trends.

Advanced algorithms such as forecasting models are used to make predictions. It is essential to understand that a forecast is just an estimate. Its accuracy depends heavily on data quality and on the stability of the situation, so it requires careful treatment and continuous optimization.
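A linear trend is about the simplest forecasting model. The sketch below fits a least-squares line to a short, made-up series and extrapolates it. Real forecasting tools use far richer models that handle seasonality and confidence intervals. As the paragraph above stresses, the result is only an estimate whose quality depends on the data and on the situation staying stable.

```python
def linear_trend_forecast(history, steps_ahead=1):
    """Fit y = a + b*t by ordinary least squares (t = 0, 1, ...) and extrapolate."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two observations")
    t_mean = (n - 1) / 2
    y_mean = sum(history) / n
    cov = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(history))
    var = sum((t - t_mean) ** 2 for t in range(n))
    slope = cov / var
    intercept = y_mean - slope * t_mean
    return intercept + slope * (n - 1 + steps_ahead)

# Hypothetical monthly storage usage in TB
usage = [10, 12, 14, 16]
print(linear_trend_forecast(usage))  # 18.0
```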

For example, HPE InfoSight can predict what may happen to IT systems based on current and historical data. This helps IT teams manage their infrastructure to prevent future disruptions.

4. Prescriptive analytics

Prescriptive analytics is used to literally prescribe what action to take when a problem occurs.

It uses vast data sets and intelligence to analyze the outcomes of possible actions and then select the best option. This state-of-the-art type of data analytics requires not only historical data but also external knowledge from human experts (as in expert systems) in its algorithms to choose the best possible decision.

Prescriptive analytics uses sophisticated tools and technologies, such as machine learning, business rules, and algorithms, which make it complex to implement and manage.

For example, IBM Runbook Automation tools help IT operations teams simplify and automate repetitive tasks. Runbooks are typically created by technical writers working for top-tier managed service providers. They include procedures for every anticipated scenario and generally use step-by-step decision trees to determine the effective response to a particular scenario.
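The step-by-step decision trees that runbooks use are easy to express in code. The sketch below encodes a hypothetical runbook for a disk-space alert. The thresholds and actions are illustrative assumptions, and this is not the format used by IBM's Runbook Automation product.

```python
# A hypothetical runbook for a "disk almost full" alert, written as a decision tree.
# Each inner node asks a yes/no question about the alert; each leaf holds an action.
RUNBOOK = {
    "question": lambda alert: alert["disk_used_pct"] >= 95,
    "yes": {"action": "page on-call engineer"},
    "no": {
        "question": lambda alert: alert["log_dir_gb"] > 10,
        "yes": {"action": "rotate and compress logs"},
        "no": {"action": "open low-priority ticket"},
    },
}

def prescribe(node, alert):
    """Walk the decision tree until a leaf action is reached."""
    while "action" not in node:
        node = node["yes"] if node["question"](alert) else node["no"]
    return node["action"]

print(prescribe(RUNBOOK, {"disk_used_pct": 90, "log_dir_gb": 25}))  # rotate and compress logs
```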

Friday, July 06, 2018

5 AI uses in Banks Today

1. Fraud Detection

Artificial intelligence tools improve defenses against fraudsters and allow banks to increase efficiency, reduce headcount in compliance, and provide a better customer experience.

For example, if a huge transaction is initiated from an account with a history of minimal transactions, AI can stop the transaction until it is verified by a human.
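Production fraud systems use trained models. Still, the behavior described above, holding an unusually large transaction until a person verifies it, can be sketched with a simple statistical rule. The function below is a hypothetical stand-in for such a model, not any bank's actual logic. It flags an amount that is more than k standard deviations above the account's historical mean, and the defaults for k and the minimum history length are assumptions.

```python
from statistics import mean, stdev

def should_hold(history, amount, k=4.0, min_history=5):
    """Hold a transaction for human review if it is far above the account's usual amounts.

    history: past transaction amounts for the account (hypothetical data source).
    k: standard deviations above the mean that count as unusual (assumed value).
    """
    if len(history) < min_history:
        return True  # assumption: too little history to judge, so ask a human
    mu, sigma = mean(history), stdev(history)
    # Floor sigma at 1.0 so an account with near-identical past amounts is not flagged for tiny changes.
    return amount > mu + k * max(sigma, 1.0)

past = [20, 35, 25, 40, 30]
print(should_hold(past, 5000))  # True
print(should_hold(past, 45))    # False
```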

2. Chatbots

Intelligent chatbots can engage users and improve customer service. AI chatbots bring a human touch. They can reproduce the nuances of a human voice and even understand the context of a conversation.

Recently, Google demonstrated an AI assistant, Google Duplex, that could make a table reservation at a restaurant over the phone.

3. Marketing & Support

AI tools can analyze past behavior to optimize future campaigns. By learning from prospects' past behavior, AI tools automatically select and place ads or collateral for digital marketing. This helps craft targeted marketing campaigns.

Also see: https://www.techaspect.com/the-ai-revolution-marketing-automation-ebook-techaspect/

4. Risk Management

Real-time transaction data analysis, combined with AI tools, can identify potential risks in offering credit. Today, banks have access to lots of transactional data via open banking. This data needs to be analyzed to understand micro-activities and assess the behavior of parties so that risks are identified correctly. For example, AI tools can flag a customer who has recently borrowed money from a large number of other banks.
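The example in the paragraph above, a customer who has recently borrowed from many other banks, is a simple feature that a risk model might consume. A minimal sketch follows. It assumes loan records with hypothetical lender names and dates have already been collected, and the window and threshold are illustrative, not industry standards.

```python
from datetime import date, timedelta

def many_recent_lenders(loans, as_of, window_days=90, max_lenders=3):
    """Flag a customer who borrowed from more than max_lenders distinct banks in the window.

    loans: list of (lender_name, loan_date) tuples, e.g. assembled from open-banking data.
    window_days and max_lenders are illustrative thresholds (assumptions).
    """
    start = as_of - timedelta(days=window_days)
    recent = {lender for lender, when in loans if start <= when <= as_of}
    return len(recent) > max_lenders

loans = [
    ("Bank A", date(2018, 6, 1)),
    ("Bank B", date(2018, 6, 10)),
    ("Bank C", date(2018, 6, 15)),
    ("Bank D", date(2018, 6, 20)),
    ("Bank A", date(2017, 1, 5)),   # outside the 90-day window
]
print(many_recent_lenders(loans, as_of=date(2018, 7, 1)))  # True
```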

5. Algorithmic Trading

AI takes data analytics to the next level. AI tools can take real-time market data and news from live feeds such as the Thomson Reuters Enterprise Platform or the Bloomberg Terminal. They can use this information to understand investor sentiment and make trading decisions in real time. This eliminates the time gap between insight and action.

Wednesday, July 04, 2018

Top Challenges Facing AI Projects in Legacy Companies

Companies reluctantly start a few AI projects, only to abandon them.

Here are the top 7 challenges AI projects face in legacy companies:

1. Management Reluctance

- Fear of exacerbating the asymmetrical power of AI

- Need to protect their domains

- Pressure to maintain the status quo

2. Ensuring Corporate Accountability

- Internal fissures

- Legacy processes hinder accountability for AI systems

3. Copyrights & Legal Compliance

- Inability to agree on data copyrights

- Legacy Processes hinder compliance when new AI systems are implemented

4. Lack of Strategic Vision

- Top management lacks strategic vision on AI

- Leaders are unaware of AI's potential

- AI projects are not fully funded

5. Data Authenticity

- Lack of tools to verify data authenticity

- Multiple data sources

- Duplicate Data

- Incomplete Data

6. Understanding Unstructured Data

- Lack of tools to analyze unstructured data

- Middle management does not understand the value of information in unstructured data

- Incomplete data for AI tools

7. Data Availability

- Lack of tools to consolidate data

- Lack of knowledge on sources of data

- Legacy systems that prevent data sharing

Tuesday, June 19, 2018

How Machine Learning Aids New Software Product Development

Developing new software products has always been a challenge. Traditional product management processes for developing new products take a lot more time and resources and cannot meet the needs of all users. With new machine learning tools and technologies, one can augment traditional product management with data analysis, automated learning systems, and automated tests.

The traditional new product development process can be broken into 5 main steps:

1. Understand

2. Define

3. Ideate

4. Prototype

5. Test

In each of the five steps, one can use data analysis and ML techniques to accelerate the process and improve the outcomes. With machine learning, the five steps become:

- Understand – Analyze: Understand user requirements by analyzing user data. In the case of web apps, one can collect huge amounts of user data from social networks, digital surveys, email campaigns, etc.

- Define – Synthesize: Defining user needs and user personas can be enhanced by synthesizing behavioral models of users from data analysis.

- Ideate – Prioritize: Developing product ideas and prioritizing them becomes a lot faster and more accurate with data analysis of customer preferences.

- Prototype – Tuning: Prototypes demonstrate basic functionality, and they can be tuned rapidly and automatically to meet each customer's needs. This helps meet the needs of multiple customer segments. Machine learning-based auto-tuning of software allows rapid experimentation, and the data collected in this phase feeds the next stage.

- Test – Validate: Prototypes are tested for user feedback. ML systems can receive feedback and analyze results for product validation and model validation. In addition, ML systems can auto-tune and auto-configure products to better fit customer needs and then re-test the prototypes.
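For the Test – Validate step, comparing two prototype variants on real users is a standard A/B test. The sketch below uses a two-proportion z-test, one common choice among several, with made-up conversion counts. It judges whether prototype B's conversion rate differs from prototype A's by more than chance would explain.

```python
from math import erfc, sqrt

def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Compare the conversion rates of prototypes A and B; return (z, two-sided p-value)."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)       # rate under "no difference"
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = erfc(abs(z) / sqrt(2))  # equals 2 * (1 - Phi(|z|)) for a standard normal
    return z, p_value

# Hypothetical results: 120 of 1000 users converted on A, 160 of 1000 on B
z, p = two_proportion_z_test(120, 1000, 160, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (conventionally below 0.05) suggests the difference is unlikely to be noise. An ML-driven tuning loop could run a check like this automatically after each re-test.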

Closing Thoughts

For a long time, product managers had to rely on their own understanding of user needs. Real user data was difficult to collect, so they depended on surveys, market analysis, and other secondary sources. In the digital world, however, one can collect vast volumes of data and use data analysis and machine learning tools to accelerate new software product development and improve success rates.

Monday, May 21, 2018

AI for IT Infrastructure Management

AI is being used today for IT infrastructure management. IT infrastructure generates lots of telemetry data from sensors and software that can be used to observe and automate. As IT infrastructure grows in size and complexity, standard monitoring tools do not work well, and that is when we need AI tools to manage it.

As in any classical AI system, AI-driven IT infrastructure management has 5 standard steps:

1. Observe:

Typical IT systems collect billions of data points from thousands of sensors, sampling data every 4-5 minutes. I/O pattern data is also collected in parallel and parsed for analysis.

2. Learn:

Telemetry data from each device is modeled along with its global connections, and the system learns the stable, active, and unstable states of each device and application. Abnormal behavior is identified by learning from the I/O patterns and configurations of each device and application.
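The Learn step can be illustrated with a very small model of "normal" behavior. The sketch tracks an exponentially weighted moving average (EWMA) of a metric and its variance, and it flags readings far outside the learned band as abnormal. This is a simplified stand-in for the pattern learning described above, run on a made-up latency series. Commercial tools model many metrics and their relationships together, and the parameter defaults here are assumptions.

```python
def ewma_anomalies(values, alpha=0.3, k=3.0, warmup=5):
    """Learn a running baseline (EWMA mean and variance) and flag points far outside it.

    alpha: smoothing factor; k: band width in standard deviations; warmup: number of
    initial points used only for learning. All three are illustrative defaults.
    Returns the indices of flagged readings.
    """
    mean, var = values[0], 0.0
    flagged = []
    for i, x in enumerate(values[1:], start=1):
        std = var ** 0.5
        if i >= warmup and abs(x - mean) > k * max(std, 1e-9):
            flagged.append(i)
            continue  # keep the anomaly out of the learned baseline
        diff = x - mean
        mean += alpha * diff
        var = (1 - alpha) * (var + alpha * diff * diff)  # incremental EWMA variance
    return flagged

latency_ms = [20, 21, 19, 20, 22, 21, 20, 95, 21, 20]
print(ewma_anomalies(latency_ms))  # [7]
```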

3. Predict:

AI engines learn to predict issues using pattern-matching algorithms. Even application performance can be modeled and predicted based on historical workload patterns and configurations.

4. Recommend:

Based on predictive analytics, recommendations are developed using expert systems. Recommendations are based on what constitutes an ideal environment or on what is needed to improve the current condition.

5. Automate:

IT automation is done via runbook automation tools, which run on behalf of IT administrators. All details of the event and the automation results are entered into an IT ticketing system.

Friday, May 18, 2018

Popular AI Programming Tools

Thursday, May 17, 2018

How to select use cases for AI automation

AI is rapidly growing and companies are actively looking at how to use AI in their organization and automate things to improve profitability.

Approaching the problem from a business management perspective, the ideal areas to automate are around the periphery of business operations. Jobs there are usually routine and repetitive and need little human intelligence, such as warehouse operators and metro train drivers. These jobs follow a set pattern, and the cost of a mistake by either a human operator or a robot is very low.

Business operations tend to employ large numbers of people with minimal skills and use many safety systems to minimize the cost of errors. These areas are usually the low-hanging fruit for automation with AI and robotics.

Developing an AI application is a lot more complex, but all AI apps share 4 basic steps:

1. Identify the area for automation: areas where automation solves a business problem and saves money.

2. Identify data sources: automation needs large amounts of data, so one needs to identify all possible sources of data and start collecting and organizing it.

3. Develop the application: once data is collected, AI applications can be developed. Today, there are several AI libraries and AI tools for developing new applications. My next blog talks about all the popular AI application development tools.

4. Deploy and improve: once an AI tool to automate a business process is developed, it has to be deployed, monitored, and checked for additional improvements, which should be part of the regular business improvement program.

Monday, May 14, 2018

Popular Programming Languages for Data Analytics

I have listed the most popular programming languages for data analysis.

Thursday, May 10, 2018

How AI Tools Help Banks

In the modern era of the digital economy, technological advancements in Machine Learning (ML) and Artificial Intelligence (AI) can help banking and financial services industry immensely.

AI & ML tools will become an integral part of how customers interact with banks and financial institutions. I have listed 8 areas where AI tools will have the greatest impact.

Tuesday, May 08, 2018

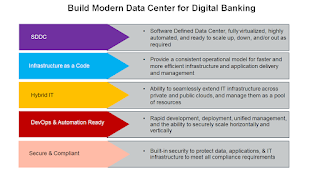

Build Modern Data Center for Digital Banking

Building a digital bank needs a modern data center. The dynamic nature of fintech and digital banking calls for a new kind of data center. It must be highly dynamic, scalable, agile, and highly available, and it must offer all compute, network, storage, and security services as programmable objects with unified management.

A modern data center enables banks to respond quickly to the dynamic needs of the business.

Rapid IT responsiveness is architected into the design of a modern infrastructure. That infrastructure abstracts traditional infrastructure silos into a cohesive, virtualized, software-defined environment that supports both legacy and cloud-native applications and seamlessly extends across private and public clouds.

A modern data center can deliver infrastructure as code to application developers for even faster provisioning of both test and production deployments via rapid DevOps.
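Infrastructure as code rests on a simple idea: developers declare the desired state, and tooling computes and applies the difference from what is actually running. The sketch below shows only the planning half of that loop, using hypothetical VM names and sizes. Real tools, for example Terraform with its plan/apply cycle, add provisioning APIs, dependency ordering, and state tracking.

```python
# Hypothetical desired state, as a developer might declare it in a config file
DESIRED = {
    "web-vm": {"cpus": 4, "memory_gb": 16},
    "db-vm": {"cpus": 8, "memory_gb": 64},
}

# Hypothetical current state, as reported by the infrastructure
CURRENT = {
    "web-vm": {"cpus": 2, "memory_gb": 16},
    "old-vm": {"cpus": 2, "memory_gb": 4},
}

def plan(desired, current):
    """Compute the actions needed to move the current infrastructure to the desired state."""
    actions = []
    for name in sorted(desired.keys() | current.keys()):
        if name not in current:
            actions.append(("create", name, desired[name]))
        elif name not in desired:
            actions.append(("delete", name, current[name]))
        elif desired[name] != current[name]:
            actions.append(("update", name, desired[name]))
    return actions

for action in plan(DESIRED, CURRENT):
    print(action)
```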

Modern IT infrastructure is built to deliver automation: to rapidly configure, provision, deploy, test, update, and decommission infrastructure and applications (legacy, cloud-native, and microservices).

Modern IT infrastructure is built with security as a solid foundation to help protect data, applications, and infrastructure in ways that meet all compliance requirements, and also offer flexibility to rapidly respond to new security threats.

Friday, May 04, 2018

Key Technologies for Next Gen Banking

Digital transformation is changing the way customers interact with banks. New digital technologies are fundamentally changing banks from branch-centric, human-interface-driven operations to digital-centric, technology-interface-driven operations.

In the next 10 years, I predict more than 90% of existing branches will close and people will migrate to digital banks. In this article, I have listed the 6 main technologies needed for next-gen banking, aka the Digital Bank.

1. Mobile

Mobile apps are changing how customers interact with banks. What started as digital payment wallets has grown into mobile banking that offers most banking services (investments, account management, lines of credit, international remittances, etc.), providing banking services anywhere, anytime!

2. Cloud & API

Mobile banking is built on cloud services such as Open APIs and microservices. Open APIs allow banks to interact with customers and other banks faster. For example, Open APIs allow business ERP systems to directly access bank accounts and transfer funds as needed, and they let banks move funds from one bank to another more quickly. In short, cloud technologies such as Open APIs and microservices are accelerating interactions between banks, and between banks and customers, thus increasing the velocity of business.

3. Big Data & Analytics

Big data and analytics are changing the way banks reach out to customers, offer new services, and create new opportunities. Today, banks have access to tremendous amounts of data: streaming data from websites, cloud services, mobile data, and real-time transaction data. All this data can be analyzed to identify new business opportunities such as microcredit and algorithmic trading.

Advanced analytical technologies such as AI and ML are increasingly being used to detect fraud, identify hidden customer needs, and create new business opportunities for banks. Though these technologies are still in their early stages, they will see faster adoption and become mainstream in the next 4-5 years.

Already, several banks are using AI tools for customer support activities such as chat, phone banking etc.

5. Biometrics & Security

As the velocity of transactions increases, security is becoming vital for financial services. Biometric authentication, stronger encryption, and continuous real-time security monitoring enhance security in a big way.

6. Blockchain & IoT

IoT has become mainstream. Banks were early adopters of IoT technologies such as POS devices, CCTV, and ATMs. Blockchain technology is used to validate IoT data from retail banking customers. This is helping banks better understand customers and tailor new offerings to create new business opportunities.
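The property that makes blockchain useful for validating data is tamper evidence: each record carries a hash of the one before it, so altering any stored record is detectable. The sketch below shows only that hash-chaining idea, using made-up POS and ATM readings. It has no distributed consensus, so it is a hash chain rather than a full blockchain.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record

def entry_hash(data, prev_hash):
    """Hash a record together with the previous record's hash."""
    body = json.dumps({"data": data, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()

def add_record(chain, data):
    """Append an IoT reading, linking it to the previous entry's hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"data": data, "prev": prev_hash, "hash": entry_hash(data, prev_hash)})

def is_valid(chain):
    """Recompute every hash; an edited record breaks the chain from that point on."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev"] != prev_hash or entry["hash"] != entry_hash(entry["data"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True

chain = []
add_record(chain, {"device": "pos-17", "amount": 42.5})
add_record(chain, {"device": "atm-03", "amount": 200})
print(is_valid(chain))              # True
chain[0]["data"]["amount"] = 4250   # tamper with a stored reading
print(is_valid(chain))              # False
```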

Thursday, May 03, 2018

Data Analytics for Competitive Advantage

Data analytics is touted as 'THE' tool for competitive advantage.

In this article, I break data analytics down into its three main components and list the activities done in each category.

Three Main Components of Data Analytics

1. Data Management

2. Standard Analytics

3. Advanced Analytics

Data Management

Data management forms the foundation of data analytics. About 80% of effort and cost is incurred in data management functions. The world of data management is vast and complex, and it consists of several activities:

1. Data Architecture

2. Data Governance

3. Data Development

4. Data Security

5. Master Data Management

6. Metadata Management

7. Data Quality Management

8. Document & Content Management

9. Database & Data Warehousing Operations

Standard Analytics

Advanced Analytics

Monday, April 30, 2018

Build State of Art AI Deep Learning Systems

The HPE Apollo 6500 Gen10 System is an ideal HPC and deep learning platform. It provides unprecedented performance with industry-leading GPUs, a fast GPU interconnect, a high-bandwidth fabric, and a configurable GPU topology to match your workloads. The ability of computers to autonomously learn, predict, and adapt using massive data sets is driving innovation and competitive advantage across many industries, and these applications are driving the demand for such platforms.

The system, with rock-solid reliability, availability, and serviceability (RAS) features, includes up to eight GPUs per server and NVLink 2.0 for fast (up to 300 GB/s) GPU-to-GPU communication. It also offers support for Intel® Xeon® Scalable processors, a choice of high-speed/low-latency fabrics, and flexible configuration capabilities to match workloads. Aimed at deep learning workloads, it can train AI models that would otherwise take days or weeks in a few hours or minutes.

HPE SDS Storage Solutions

HPE Solution for Intel Enterprise Edition for Lustre is a high-performance computing (HPC) storage solution that includes the HPE Apollo 4520 System and Intel® Enterprise Edition for Lustre*. The Apollo 4520 is a dual-node system with up to 46 drives. Capacity can be increased by adding drives in a disk enclosure. The solution can scale performance and capacity nearly linearly by adding more systems in parallel.

Scality RING running on HPE ProLiant servers provides an SDS solution for petabyte-scale data storage that is designed to interoperate in the modern SDDC. The RING software creates a scale-out storage system, deployed as a distributed system on a minimum cluster of six storage servers.

Scality RING object storage software is designed to run on industry-standard server platforms, offering lower infrastructure costs and scalability beyond the capacity points of typical file server storage subsystems. The HPE Apollo 4200 series servers provide a comprehensive and cost-effective set of storage building blocks for customers that wish to deploy an object storage software solution.

Vertica v9

Formerly an HPE product, Vertica Version 9 delivers high-performance in-database machine learning and advanced analytics in a unified advanced analytics database.