Wednesday, December 13, 2017

Monday, December 11, 2017

7 Principles for DevOps Success

In today's app-based economy, business success depends on DevOps. Business leaders must understand what it takes to build a mature, effective Continuous Delivery (CD) practice.

Here are 7 key principles business leaders must embrace and practice.

1. Production readiness

The fundamental principle behind CD is the ability to deliver a production-ready release on demand. The organization must reach a maturity level in which the application code is always in a production ready state. Production readiness does not necessarily mean that the application is deployed to production on a continuous basis, but rather the organization retains the ability to do so at will.

2. Uncompromised quality

Software quality cannot be compromised at any point, and the development organization has to prioritize high quality over new features. Ensuring consistently high quality requires developer responsibility and proper tooling. It demands tiers of comprehensive testing: unit testing and static analysis before the build, followed by automated functional, load, and endurance testing with proper runtime diagnostics tools in place. Quality failures abort the build process until resolution.

3. Repeatable delivery

The entire software delivery process, from build through staging to production, must be reliably repeatable so that each iteration is performed in an identical manner. This is achieved by automating IT tasks. Repeated manual tasks are prone to errors and inconsistencies, and they waste expensive IT resources and time. Automation of these tasks is a prerequisite to any successful CD process.

4. Frequent build and integration

A CD environment operates with the notion that changes to the application code between build cycles are minimal. Agile, incremental development is practiced alongside CD to ensure that the development project is broken into short iterations. Builds are triggered on every code check-in to ensure that problems are isolated and addressed quickly.

5. Application stack consistency

Application stacks should be consistent and automatically provisioned to eliminate environment configuration inconsistencies. Consistency also accelerates problem resolution for both developers and IT, as it reduces failures related to the application's external dependencies.

6. Diagnostics and application management

High code quality requires problem detection and immediate resolution as defects occur. Fast and meaningful diagnostics data is critical to a successful CD implementation. Static analysis and dynamic analysis tools are deployed in sequence during the build cycle, providing developers with insight and drill-down data. A lack of developer insight and diagnostics information allows defects to slip through and delays the delivery of a quality build.

7. Broad test automation coverage

Test automation is a prerequisite to ensure high quality and production readiness. Unit tests and multiple layers of automated functional tests are implemented to identify potential issues and regressions. Developers are required to develop unit tests for each submitted piece of code. Automated code quality and unit testing during the integration phase should cover at minimum 90 percent of the code.
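A coverage gate of this kind can be sketched in a few lines of Python. This is only an illustration, not the interface of any particular CI tool: the function names and the idea of passing raw line counts are assumptions made for the example, and only the 90 percent threshold comes from the text above.

```python
def coverage_percent(covered_lines: int, total_lines: int) -> float:
    """Return line coverage as a percentage of all executable lines."""
    if total_lines <= 0:
        raise ValueError("total_lines must be positive")
    return 100.0 * covered_lines / total_lines


def coverage_gate(covered_lines: int, total_lines: int, minimum: float = 90.0) -> bool:
    """Return True when coverage meets the minimum.

    A build script (hypothetical) would abort the build when this is False.
    """
    return coverage_percent(covered_lines, total_lines) >= minimum
```

A build step could call `coverage_gate(covered, total)` after the unit tests run and stop the pipeline when it returns `False`, in line with the principle that quality failures abort the build.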

Labels:

Agile,

DevOps,

Leadership

Product Management in Sustaining Products

Every product goes through a natural product lifecycle. After the initial release (ver 1.0), it rapidly goes through mass adoption, and then most often enters a long period of sustenance, in which few incremental features are added and new platform support is added to keep the product relevant to the marketplace.

Product management in this phase, where the product is just being sustained, is as important as during its initial creation. This is the time when the product generates the highest profits. It is therefore very important for product managers to be prudent and efficient in managing the product.

To be successful in this life stage of the product, one needs to adopt a management-by-metrics approach, with four main metrics:

1. Time to Market

2. Development Costs

3. Defect Rates

4. Support Costs

Figure-1: An illustration of main metrics

The main focus of product management here is to ensure that these metrics trend rapidly downward. For example, the objective may be to reduce defect rates by 73% compared to the baseline version (usually ver 3.0, or the version that defined the product).
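As a worked illustration of measuring a metric against its baseline, here is a minimal Python sketch. The function names and the defect counts in the usage note are hypothetical; only the 73% target comes from the example above.

```python
def percent_reduction(baseline: float, current: float) -> float:
    """Return how far a metric has dropped relative to its baseline, in percent."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (baseline - current) / baseline


def meets_target(baseline: float, current: float, target_percent: float) -> bool:
    """Return True when the reduction reaches the target percentage."""
    return percent_reduction(baseline, current) >= target_percent
```

With a hypothetical baseline of 200 defects per release, a release with 54 defects is a 73% reduction, so `meets_target(200, 54, 73.0)` holds, while a release with 60 defects (a 70% reduction) does not.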

These metrics then become the driving factors for new development - i.e., incremental development of the product.

At this stage of the product lifecycle, it is very important to adopt agile practices (Agile product management, Agile product development, and Agile leadership) to significantly lower the costs of sustaining the product and increase its profits.

This turns the core cost centers into the key profit center!

Labels:

Agile,

Leadership,

Product Management

Thursday, December 07, 2017

Ten Things One Should Know about DevOps

DevOps has taken the IT world by storm over the last few years and continues to transform the way organizations develop, deploy, monitor, and maintain applications, as well as modifying the underlying infrastructure. DevOps has quickly evolved from a niche concept to a business imperative and companies of all sizes should be striving to incorporate DevOps tools and principles.

The value of successful DevOps is quantifiable. According to the 2015 State of DevOps Report, organizations that effectively adopt DevOps deploy software 30 times more frequently and with 200 times shorter lead times than competing organizations that have yet to embrace DevOps.

They also have 60 times fewer failures, and recover from those failures 168 times faster. Those are impressive numbers, and they explain why succeeding at DevOps is so important for organizations to remain competitive today.

As the DevOps revolution continues, though, many enterprises are still watching curiously from the sidelines trying to understand what it's all about. Some have jumped in, yet are struggling to succeed. But one thing's certain — it's a much greater challenge to succeed at DevOps if your CIO doesn't grasp what it is or how to adopt it effectively.

Labels:

Agile,

analytics,

DevOps,

Digitalisation,

Digitalization

Tuesday, December 05, 2017

Thursday, November 30, 2017

Wednesday, November 29, 2017

Software Architecture for Cloud Native Apps

Microservices are a type of software architecture where large applications are made up of small, self-contained units working together through APIs that are not dependent on a specific language. Each service has a limited scope, concentrates on a particular task and is highly independent. This setup allows IT managers and developers to build systems in a modular way.

Microservices are small, focused components built to do a single thing very well.

Componentization:

Microservices are independent units that are easily replaced or upgraded. The units communicate through mechanisms such as remote procedure calls or web service requests.

Business capabilities:

Legacy application development often splits teams into areas like the "server-side team" and the "database team." Microservices development is built around business capability, with responsibility for a complete stack of functions such as UX and project management.

Products rather than projects:

Instead of focusing on a software project that is handed over on completion, microservices teams treat applications as products that they own. They establish an ongoing dialogue with the goal of continually matching the app to the business function.

Dumb pipes, smart endpoints:

Microservice applications contain their own logic. Resources that are often used are cached easily.

Decentralized governance:

Tools are built and shared to handle similar problems on other teams.

Problems microservices solve

Larger organizations run into problems when monolithic architectures cannot be scaled, upgraded or maintained easily as they grow over time.

Microservices architecture is an answer to that problem. It is a software architecture where complex tasks are broken down into small processes that operate independently and communicate through language-agnostic APIs.

Monolithic applications are made up of a user interface on the client, an application on the server, and a database. The application processes HTTP requests, gets information from the database, and sends it to the browser. Microservices handle HTTP request response with APIs and messaging. They respond with JSON/XML or HTML sent to the presentation components.
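To make the request/response pattern concrete, here is a minimal sketch of a single-purpose service that answers HTTP GET requests with JSON, using only the Python standard library. The `/items/` path, the `MENU` data, and the names `handle_get` and `ItemHandler` are assumptions invented for this example, not part of any framework discussed here.

```python
import json
from http.server import BaseHTTPRequestHandler
from typing import Tuple

# Hypothetical in-memory data; a real service would own its own data store.
MENU = {"1": {"name": "espresso", "price": 2.5}}


def handle_get(path: str) -> Tuple[int, str]:
    """Map a request path to an (HTTP status, JSON body) pair."""
    prefix = "/items/"
    if path.startswith(prefix):
        item = MENU.get(path[len(prefix):])
        if item is not None:
            return 200, json.dumps(item)
    return 404, json.dumps({"error": "not found"})


class ItemHandler(BaseHTTPRequestHandler):
    """Thin HTTP wrapper: all service logic lives in handle_get."""

    def do_GET(self) -> None:
        status, body = handle_get(self.path)
        payload = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)
```

Passing `ItemHandler` to `http.server.HTTPServer` and calling `serve_forever()` would expose the service. Keeping the logic in a plain function means the service can be tested without opening a socket.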

Microservices proponents rebel against enforced standards of architecture groups in large organizations but enthusiastically engage with open formats like HTTP, ATOM and others.

As applications get bigger, intricate dependencies and connections grow. Whether you are talking about monolithic architecture or smaller units, microservices let you split things up into components. This allows horizontal scaling, which makes it much easier to manage and maintain separate components.

The relationship of microservices to DevOps

Incorporating new technology is just part of the challenge. Perhaps a greater obstacle is developing a new culture that encourages risk-taking and taking responsibility for an entire project "from cradle to crypt."

Developers used to legacy systems may experience culture shock when they are given more autonomy than ever before. Communicating clear expectations for accountability and performance of each team member is vital. DevOps is critical in determining where and when microservices should be utilized. It is an important decision because trying to combine microservices with bloated, monolithic legacy systems may not always work. Changes cannot be made fast enough. With microservices, services are continually being developed and refined on-the-fly.

DevOps must ensure updated components are put into production, working closely with internal stakeholders and suppliers to incorporate updates. Microservices are an easier solution than SOA, much like JSON was considered to be simpler than XML and people viewed REST as simpler than SOAP.

With Microservices, we are moving toward systems that are easier to build, deploy and understand.

Managing Hybrid IT with HPE OneSphere

HPE OneSphere simplifies multi-cloud management for enterprises. With HPE OneSphere:

1. Deliver everything “as-a-service”

a. Present all resources as ready-to-deploy services

b. Build a VM farm that spans private and public clouds

c. Dynamically scale resources

d. Lower Opex

2. Control IT spend and utilization of public cloud services

a. Manage subscription-based consumption

b. Optimize app placement using insights/reports

c. Get visibility into cross-cloud resource utilization & costs

3. Respond faster by enabling fast app deployment

a. Provide quota-based project workspaces

b. Provide self-service access to curated tools, resources & templates

c. Streamline DevOps processes

Labels:

Cloud computing,

HPE,

Hybrid IT,

OneSphere

Tuesday, November 28, 2017

The Digital Workplace

Today's digital workforce demands secure, high-speed Wi-Fi connectivity. Pervasive wireless access to business-critical applications is now expected wherever users work. Wireless LANs (WLANs) need massive scalability, uncompromising security, and rock solid reliability to accommodate the soaring demand.

Embracing a mobile first digital workplace

Designing and building a high-performance workplace requires a wireless network, and both the network and the applications that run on it are where services from Hewlett Packard Enterprise (HPE) excel.

With Aruba wireless technology, HPE can deliver a mobile-first workplace that connects to Microsoft Skype for Business and Office 365, making the transition to a digital workplace a seamless process.

The digital workplace enables a bring-your-own (BYO)-everything approach with pervasive wireless connectivity, security, and reliability. This enables IT to focus on automation and centralized management. The mobile-first workplace will be simpler to manage and maintain.

Benefits include:

• Higher productivity with fast, secure, and always-on 802.11ac Wi-Fi connectivity

• Lower operating expenditures (OPEX) through reduced reliance on cellular networks

• Better user experiences

• Reduce infrastructure cost in an all wireless workplace by 34%

• Increase business productivity

• Reduce hours spent on-boarding and performing adds, moves, and changes

Labels:

Digitalisation,

Digitalization,

Internet-of-Things

HPE Elastic Platform for Big Data Analytics

A big data analytics platform has to be elastic, i.e., able to scale out with additional servers as needed.

In my previous post, I described the software architecture for Big Data analytics. This article is all about the hardware infrastructure needed to deploy it.

Does your Big Data, object storage, or data analytics workload need a scalable, dense storage system? The HPE Apollo 4510 Gen10 System offers revolutionary storage density in a 4U form factor. It fits in a standard HPE 1075 mm rack and has one of the highest storage capacities of any 4U server with standard server depth. When you are running Big Data solutions, such as object storage, data analytics, content delivery, or other data-intensive workloads, the HPE Apollo 4510 Gen10 System allows you to save valuable data center space. Its unique, density-optimized 4U form factor holds up to 60 large form factor (LFF) drives and an additional 2 small form factor (SFF) or M.2 drives. For configurability, the drives can be NVMe, SAS, or SATA disk drives or solid state drives.

The HPE ProLiant DL560 Gen10 Server is a high-density, 4P server with high performance, scalability, and reliability in a 2U chassis. Supporting the Intel® Xeon® Scalable processors with up to a 68% performance gain, the HPE ProLiant DL560 Gen10 Server offers greater processing power, up to 3 TB of faster memory, I/O of up to eight PCIe 3.0 slots, plus the intelligence and simplicity of automated management with HPE OneView and HPE iLO 5. The HPE ProLiant DL560 Gen10 Server is the ideal server for Big Data analytics workloads (YARN apps, Spark SQL, Spark Streaming, MLlib, GraphX, NoSQL, Kafka, Sqoop, Flume, and so on), as well as database, business processing, and data-intensive applications where data center space and the right performance are of paramount importance.

The main benefits of this platform are:

- Flexibility to scale: Scale compute and storage independently

- Cluster consolidation: Multiple big data environments can directly access a shared pool of data

- Maximum elasticity: Rapidly provision compute without affecting storage

- Breakthrough economics: Significantly better density, cost and power through workload optimized components

Labels:

BigData,

Data Center,

DataLake,

Lusture File System,

Object Store,

SDS

Big Data Warehouse Reference Architecture

Data needs to get into Hadoop in some way and needs to be securely accessed through different tools. There is a massive choice of tools for each component, depending on the Hadoop distribution selected; each distribution has its own versions of the tools but provides the same functionality.

Just like core Hadoop, the tools that ingest and access data in Hadoop can scale independently, for example as a Spark cluster, Flume cluster, or Kafka cluster.

Each specific tool has its own infrastructure requirements.

For example, Spark requires more memory and processor power but is less dependent on hard disk drives.

HBase doesn't require as many cores within the processor but requires more servers and faster non-volatile memory such as SSD and NVMe-based flash.

Labels:

BigData,

Information Security

Wednesday, November 22, 2017

Why Use Lustre File System?

The Lustre file system is designed for large-scale, high-performance data storage. Lustre was designed for High Performance Computing requirements, and it scales linearly to meet the most stringent and demanding requirements of media applications.

Because Lustre file systems offer high performance and open source licensing, they are often used in supercomputers. Since June 2005, Lustre has consistently been used by at least half of the top ten, and more than 60 of the top 100, fastest supercomputers in the world, including the No. 2 and No. 3 ranked TOP500 supercomputers in 2014, Titan and Sequoia.

Lustre file systems are scalable and can be part of multiple computer clusters with tens of thousands of client nodes, hundreds of petabytes (PB) of storage on thousands of servers, and more than a terabyte per second (TB/s) of aggregate I/O throughput. This makes Lustre file systems a popular choice for businesses with large data centers, including those in industries such as Media Service, Finance, Research, Life sciences, and Oil & Gas.

Why Is Object Store Ideal Data Storage for Media Apps?

We are seeing a tremendous explosion of media content on the Internet. Today, it's not just YouTube for video distribution; there are millions of mobile apps that distribute media (audio and video content) over the Internet.

Users today expect on-demand audio/video, anywhere, anytime access from any device. This increases the number of transcoded copies - to accommodate devices with various screen sizes.

Companies are now using Video and Audio as major means of distributing information in their websites. This media content is cataloged online and is always available for users.

Even the content creation is adding new challenges to data storage. The advent of new audio & video technologies is making raw content capture much larger: 3D, 4K/8K, High Dynamic Range, High Frame Rates (120 fps, 240fps), Virtual and Augmented Reality, etc.

The content creation workflow has changed from file-based workflows to cloud-based workflows for production, post-production processing such as digital effects, rendering, or transcoding, as well as distribution and archiving. This has created a need for real-time collaboration across distributed environments and teams scattered all over the globe, across many locations and time zones.

All these changes in how media is created and consumed have resulted in dataset sizes so massive that traditional storage architectures just can't keep up any longer in terms of scalability.

Traditional storage array technologies such as RAID are no longer capable of serving the new data demands. For instance, routine RAID rebuilds take far too long after a failure, heightening the risk of data loss if additional failures occur during that dangerously long window. Furthermore, even if current storage architectures could technically keep up, they are cost-prohibitive, especially considering the impending data growth tsunami about to hit. To top it off, they just can't offer the agility, efficiency and flexibility new business models have come to expect in terms of instant and unfettered access, rock-solid availability, capacity elasticity, deployment time and so on.

Facing such daunting challenges, the good news is that a solution does exist and is here today: Object Storage.

Object Storage is based on sophisticated storage software algorithms running on a distributed, interconnected cluster of high-performance yet standard commodity hardware nodes, delivering an architected solution that meets the stringent performance, scalability, and cost-saving requirements of massive data footprints. The technology has been around for some time but is now coming of age.

The Media and Entertainment industry is well aware of the benefits Object Storage provides, which is why many players are moving toward object storage and away from traditional file system storage. These benefits include:

- Virtually unlimited scalability

- Scale-out by adding new server nodes

- Low cost through the use of commodity hardware

- Flat and global namespace, with no locking or volume semantics

- Powerful embedded metadata capabilities (native as well as user-defined)

- Simple and low-overhead RESTful API for ubiquitous, straightforward access over HTTP from any client anywhere

- Self-healing capabilities with sophisticated and efficient data protection through erasure coding (local or geo-dispersed)

- Multi-tenant management and data access capabilities (ideal for service providers)

- Reduced complexity (of initial deployment/staging as well as ongoing data management)

- No forklift upgrades, and no need for labor-intensive data migration projects

- Software-defined storage flexibility and management
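Two of the properties listed above, the flat namespace and embedded metadata, can be illustrated with a toy in-memory store. This is a sketch under stated assumptions, not the API of Scality Ring or any other product: the class and method names are invented, and a real object store would expose comparable operations over HTTP and spread data across nodes.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class StoredObject:
    data: bytes
    metadata: Dict[str, str] = field(default_factory=dict)


class FlatObjectStore:
    """Toy object store: one flat key space, with no directories, volumes, or locks."""

    def __init__(self) -> None:
        self._objects: Dict[str, StoredObject] = {}

    def put(self, key: str, data: bytes, **user_metadata: str) -> str:
        """Store an object with native and user-defined metadata; return its checksum."""
        checksum = hashlib.sha256(data).hexdigest()
        metadata = {"size": str(len(data)), "sha256": checksum, **user_metadata}
        self._objects[key] = StoredObject(data, metadata)
        return checksum

    def get(self, key: str) -> bytes:
        """Return the object's content."""
        return self._objects[key].data

    def head(self, key: str) -> Dict[str, str]:
        """Return only the metadata, without reading the content."""
        return dict(self._objects[key].metadata)
```

A key such as `videos/clip-001.mp4` looks like a path, but the store treats it as a single opaque string, which is what keeps the namespace flat.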

HPE, a leading seller of servers and hyperconverged systems, offers several low-cost, high-performance solutions for Object Storage on its servers using Software Defined Storage solutions:

1. Object Store with Scality Ring

2. Lustre File System

Scality Ring Object Store is a paid SDS offering from Scality Inc which is ideal for enterprise customers.

The Lustre file system is an open-source, parallel file system that supports many requirements of leadership class HPC simulation environments. Born from a research project at Carnegie Mellon University, the Lustre file system has grown into a file system supporting some of the Earth's most powerful supercomputers. The Lustre file system provides a POSIX compliant file system interface, and can scale to thousands of clients, petabytes of storage and hundreds of gigabytes per second of I/O bandwidth. The key components of the Lustre file system are the Metadata Servers (MDS), the Metadata Targets (MDT), Object Storage Servers (OSS), Object Storage Targets (OST) and the Lustre clients.

In short, Lustre is ideal for large scale storage needs of service providers and large enterprises.

Thursday, November 16, 2017

Why Use Containers for Microservices?

Microservices deliver three benefits: speed to market, scalability, and flexibility.

Speed to Market

Microservices are small, modular pieces of software. They are built independently. As such, development teams can deliver code to market faster. Engineers iterate on features, and incrementally deliver functionality to production via an automated continuous delivery pipeline.

Scalability

At web-scale, it's common to have hundreds or thousands of microservices running in production. Each service can be scaled independently, offering tremendous flexibility. For example, let's say you are running IT for an insurance firm. You may scale enrollment microservices during a month-long open enrollment period. Similarly, you may scale member inquiry microservices at a different time, e.g., during the first week of the coverage year, as you anticipate higher call volumes from subscribed members. This type of scalability is very appealing, as it directly helps a business boost revenue and support a growing customer base.

Flexibility

With microservices, developers can make simple changes easily. They no longer have to wrestle with millions of lines of code. Microservices are smaller in scale. And because microservices interact via APIs, developers can choose the right tool (programming language, data store, and so on) for improving a service.

Consider a developer updating a security authorization microservice. The dev can choose to host the authorization data in a document store. This option offers more flexibility in adding and removing authorizations than a relational database. If another developer wants to implement an enrollment service, they can choose a relational database as its backing store. New open-source options appear daily. With microservices, developers are free to use new tech as they see fit.

Each service is small, independent, and follows a contract. This means development teams can choose to rewrite any given service, without affecting the other services, or requiring a full-fledged deployment of all services.
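The idea of a service that "follows a contract" can be sketched with a Python `Protocol`. Everything named here is hypothetical and chosen to echo the authorization example above: the contract `AuthorizationService`, the two implementations, and the caller `can_enroll`. The in-memory dictionaries and sets only stand in for a document store and a relational table.

```python
from typing import Dict, Protocol, Set, Tuple


class AuthorizationService(Protocol):
    """The contract: callers depend on these two methods and nothing else."""

    def grant(self, user: str, permission: str) -> None: ...

    def is_allowed(self, user: str, permission: str) -> bool: ...


class DocumentStoreAuth:
    """First implementation: one 'document' of permissions per user."""

    def __init__(self) -> None:
        self._docs: Dict[str, Set[str]] = {}

    def grant(self, user: str, permission: str) -> None:
        self._docs.setdefault(user, set()).add(permission)

    def is_allowed(self, user: str, permission: str) -> bool:
        return permission in self._docs.get(user, set())


class TableAuth:
    """Rewritten implementation: rows of (user, permission) pairs."""

    def __init__(self) -> None:
        self._rows: Set[Tuple[str, str]] = set()

    def grant(self, user: str, permission: str) -> None:
        self._rows.add((user, permission))

    def is_allowed(self, user: str, permission: str) -> bool:
        return (user, permission) in self._rows


def can_enroll(auth: AuthorizationService, user: str) -> bool:
    """A caller in another service; it works with any implementation of the contract."""
    return auth.is_allowed(user, "enroll")
```

Swapping `DocumentStoreAuth` for `TableAuth` requires no change to `can_enroll`, which is the property that lets a team rewrite one service without touching the others.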

This is incredibly valuable in an era of fast-moving business requirements.

Labels:

Cloud computing,

Containers,

DevOps,

FIntech,

Microserivices

Monday, November 13, 2017

What is Flocker

Flocker is an open-source container data volume manager for your Dockerized applications. By providing tools for data migrations, Flocker gives ops teams what they need to run containerized stateful services like databases in production. Flocker manages Docker containers and data volumes together.

Labels:

Containers,

OpenStack,

Storage Virtulization

Sunday, November 12, 2017

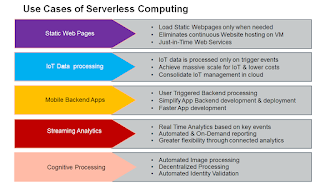

Serverless Computing for Microservices

Microservices is a newer architectural style for developing software. It is best defined as:

"Service Oriented Architecture composed of loosely coupled components that have clearly defined boundaries"

This can be interpreted as a set of software functions that work together based on predefined rules. Take a restaurant website, for example. A typical restaurant website does not have high traffic throughout the day; traffic increases around lunch and dinner time. Hosting this website on a dedicated VM is therefore a waste of resources. The website can also be broken down into a few distinct functions. The main webpage is the landing zone, and from there each section, such as Photos, Menu, or Location, can be another independent function. The user triggers these functions by clicking on hyperlinks and is served the requested data.

This implies no coupling, or only loosely coupled functions, that make up the entire website, and each function can be modified or updated independently. The business owner can therefore update any one section without bringing down the entire website.

From a cost perspective, building a website with Function-as-a-Service also lets the business pay only for actual usage, and each segment of the site can scale independently.
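The restaurant example can be sketched as a handful of independent functions behind a small dispatcher. This is an illustrative model only and assumes nothing about a specific Function-as-a-Service platform: the paths, handler names, response shape, and the `INVOCATIONS` counter (a stand-in for per-use billing) are all invented for the example.

```python
from collections import Counter
from typing import Callable, Dict, Optional

Event = Dict[str, str]
Response = Dict[str, object]


def landing(event: Event) -> Response:
    return {"status": 200, "body": "Welcome to the restaurant"}


def menu(event: Event) -> Response:
    return {"status": 200, "body": ["soup", "pasta", "tiramisu"]}


def location(event: Event) -> Response:
    return {"status": 200, "body": "12 Example Street"}


# Each section of the site is its own function and can be replaced independently.
FUNCTIONS: Dict[str, Callable[[Event], Response]] = {
    "/": landing,
    "/menu": menu,
    "/location": location,
}

# Stand-in for usage-based billing: count invocations per function.
INVOCATIONS: Counter = Counter()


def invoke(path: str, event: Optional[Event] = None) -> Response:
    """Run the function registered for a path, as a click on a hyperlink would."""
    handler = FUNCTIONS.get(path)
    if handler is None:
        return {"status": 404, "body": "not found"}
    INVOCATIONS[path] += 1
    return handler(event or {})
```

Replacing `FUNCTIONS["/menu"]` with a new handler updates the menu section while the landing page and location functions keep serving requests.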

Labels:

Innovation,

MicroApps,

Microserivices,

Serverless computing

Thursday, November 09, 2017

Containers Vs Serverless Functions

Today, containers and functions are "very hot" among the developer community. If you are using Fission, serverless functions run inside a container, which creates some confusion among young developers and students. As an expert in the latest computing architectures and solutions, I get many questions about what exactly the difference between the two is.

In this post, I describe the key differences between the two. The differences are not clear-cut in some aspects, such as scaling and management, but I hope the major differences here give you a better understanding of how containers and serverless functions work.

Labels:

Cloud computing,

Containers,

Serverless computing

Wednesday, November 08, 2017

VMware VIC on HPE ProLiant Gen10 DL360 and DL560 Servers

VMware's vSphere Integrated Containers (VIC) enables IT teams to seamlessly run traditional and container workloads side-by-side on existing vSphere infrastructure.

vSphere Integrated Containers comprises three major components:

- vSphere Integrated Containers Engine: A container runtime for vSphere that allows you to provision containers as virtual machines, offering the same security and functionality of virtual machines in VMware ESXi™ hosts or vCenter Server® instances.

- vSphere Integrated Containers Registry: An enterprise-class container registry server that stores and distributes container images. vSphere Integrated Containers Registry extends the Docker Distribution open source project by adding the functionalities that an enterprise requires, such as security, identity and management.

- vSphere Integrated Containers Management Portal: A container management portal that provides a UI for DevOps teams to provision and manage containers, including the ability to obtain statistics and information about container instances. Cloud administrators can manage container hosts and apply governance to their usage, including capacity quotas and approval workflows.

Labels:

Containers,

Storage Virtulization,

VMware VIC

Monday, November 06, 2017

Run Big Data Apps on Containers with Mesosphere

Apache Mesos was created in 2009 at UC Berkeley. It was designed to run large-scale web apps such as Twitter and Uber. It can scale up to tens of thousands of nodes and supports Docker containers.

Mesos is a distributed OS kernel:

- Two level resource scheduling

- Launch tasks across the cluster

- Communication between tasks (like IPC)

- APIs for building “native” applications (aka frameworks): program against the datacenter

- APIs in C++, Python, JVM-languages, Go and counting

- Pluggable CPU, memory, IO isolation

- Multi-tenant workloads

- Failure detection & Easy failover and HA

Mesos is a multi-framework platform solution with weighted fair sharing, roles, and so on. It runs Docker containers alongside other popular frameworks such as Spark, Rails, and Hadoop, and allows users to run regular services and batch apps in the same cluster. Mesos has advanced scheduling (resources, constraints, a global view of resources) and is designed for HA and self-healing.
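Two-level resource scheduling can be illustrated with a toy model: a master decides which framework is offered an agent's free resources, and each framework decides which of its own tasks to launch from that offer. The sketch below is not the Mesos API; every class, field, and method name is hypothetical, and the master simply offers resources to frameworks in list order rather than applying weighted fair sharing.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Offer:
    agent: str
    cpus: float
    mem_mb: int


@dataclass
class Task:
    name: str
    cpus: float
    mem_mb: int


class Framework:
    """Second level: the framework picks which of its pending tasks fit an offer."""

    def __init__(self, name: str, pending: List[Task]) -> None:
        self.name = name
        self.pending = list(pending)

    def on_offer(self, offer: Offer) -> List[Task]:
        accepted: List[Task] = []
        cpus, mem = offer.cpus, offer.mem_mb
        for task in list(self.pending):
            if task.cpus <= cpus and task.mem_mb <= mem:
                accepted.append(task)
                self.pending.remove(task)
                cpus -= task.cpus
                mem -= task.mem_mb
        return accepted


class Master:
    """First level: the master decides which framework is offered which resources."""

    def __init__(self, agents: Dict[str, Tuple[float, int]]) -> None:
        self.free = dict(agents)  # agent -> (cpus, mem_mb) not yet allocated

    def offer_round(self, frameworks: List[Framework]) -> Dict[str, List[str]]:
        placements: Dict[str, List[str]] = {agent: [] for agent in self.free}
        for agent in self.free:
            for fw in frameworks:
                cpus, mem = self.free[agent]
                for task in fw.on_offer(Offer(agent, cpus, mem)):
                    cpus -= task.cpus
                    mem -= task.mem_mb
                    placements[agent].append(f"{fw.name}/{task.name}")
                self.free[agent] = (cpus, mem)
        return placements
```

The split matters because the master never needs to understand a framework's tasks; it only tracks free resources, which is how different frameworks can share one cluster.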

Mesos is now proven at scale and battle-tested in production, running some of the biggest web apps.

Labels:

BigData,

CloudFourm,

Containers,

Data Center,

Innovation,

Internet-of-Things,

Microserivices

Thursday, November 02, 2017

Corda is not a Blockchain

On November 30th, 2016 the R3 foundation publicly released the code for its Corda decentralized ledger platform along with a bevy of developer tools, repositories, and community features including both a Slack and a Forum. A little under a month out, and it is safe to say that the Corda platform is well underway under the guidance of the well known Mike Hearn who also wrote the technical whitepaper on Corda.

Notably, in this white paper and in the code, the development team has taken a new approach to decentralized ledgers: Corda is not a blockchain. Although many aspects of Corda resemble a blockchain, Corda is not one. Transaction races are deconflicted using pluggable notaries. A single Corda network may contain multiple notaries that provide their guarantees using a variety of different algorithms. Thus Corda is not tied to any particular consensus algorithm.

This is a fascinating addition to the distributed/decentralized ledger race, in that one of the best-known consortia in the blockchain space has moved away from using blocks of transactions linked together. It is an intriguing peer-to-peer architecture: transactions use the UTXO input/output model, which is very similar to the transaction system used in more traditional blockchains such as Bitcoin, but storage and verification are not written into blocks.
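The combination of a UTXO-style model and a notary that deconflicts transaction races can be shown with a toy sketch. It is not Corda's API or data model: the names `StateRef`, `Transaction`, and `ToyNotary` are invented here, and the only behaviour modelled is that each output state can be consumed by at most one later transaction, with no blocks involved.

```python
from dataclasses import dataclass
from typing import Set, Tuple

# A state is identified by the transaction that created it and its output index.
StateRef = Tuple[str, int]


@dataclass(frozen=True)
class Transaction:
    tx_id: str
    inputs: Tuple[StateRef, ...]  # states this transaction consumes
    output_count: int             # number of new states it creates


class ToyNotary:
    """Accepts a transaction only if none of its input states was consumed before."""

    def __init__(self) -> None:
        self._consumed: Set[StateRef] = set()

    def notarise(self, tx: Transaction) -> bool:
        if any(ref in self._consumed for ref in tx.inputs):
            return False  # another transaction already spent one of these states
        self._consumed.update(tx.inputs)
        return True
```

If two transactions race to consume the same state, whichever reaches the notary first is accepted and the other is rejected, so the conflict is resolved without ordering transactions into blocks.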

Likewise, Corda does not contain a general gossip protocol which broadcasts all transactions to the network. The validation function of the contract code only needs the validation chain of each individual transaction that it is working with, and transactions that occur on the ledger are not broadcast to a public repository or written into blocks. In addition, the consensus protocol of each deployment of Corda can change, allowing the platform to conform to the needs & specifications of each client. These simplifications allow Corda to sidestep the scalability issues dogging blockchains like Bitcoin while allowing for a system that conforms to the needs of an enterprise rather than forcing a multi-gajillion dollar company to fundamentally change the way it handles payments.

The Corda architecture is a highly client-sensitive private ledger that allows for nodes tailored to the kinds of transactions that their operators need. The ledger allows for mistakes to be fixed and states to be edited, and it is stored in an H2 database engine that is queried with SQL. However, any changes to states must also conform to, and be validated by, the code. This is a realist approach to an enterprise distributed ledger, as it takes into account the need for familiar integration, headroom for the inevitable human mistake, and a single truth between parties. As mentioned before, the state system also contains a direct reference to an actual legal document that governs this truth.

Labels:

bitcoin,

blockchain,

Corda,

R3 Corda

Wednesday, November 01, 2017

Network Architecture for Ceph Storage

As data continues to grow exponentially, storing today’s data volumes in an efficient way is a challenge. Traditional storage solutions neither scale out nor make it feasible, from a Capex and Opex perspective, to deploy petabyte or exabyte data stores. OpenStack Ceph Storage is a novel approach to managing present-day data volumes and providing users with reasonable access times at a manageable cost.

Ceph is a massively scalable, open source, software-defined storage solution, which uniquely provides object, block and file system services with a single, unified Ceph Storage Cluster.

Ceph is highly reliable and easy to manage. The power of Ceph can transform your company’s IT infrastructure and your ability to manage vast amounts of data. Ceph delivers extraordinary scalability: thousands of clients accessing petabytes or even exabytes of data. A Ceph Node leverages commodity hardware and intelligent daemons, and a Ceph Storage Cluster accommodates large numbers of nodes, which communicate with each other to replicate and redistribute data dynamically. A Ceph Monitor can also be placed into a cluster of Ceph monitors to oversee the Ceph nodes in the Ceph Storage Cluster, thereby ensuring high availability.

This architecture is a cost-effective solution based on the HPE Synergy platform, which can scale out to multi-petabyte capacity.

Labels:

Ceph,

Cloud computing,

DevOps,

OpenStack,

Synergy

Tuesday, October 31, 2017

HPE Synergy Advantage for Software Defined Storage

Cloud platforms require a massively scalable, programmable storage platform that supports cloud infrastructure, media repositories, backup and restore systems, and data lakes. HPE Synergy is an ideal platform for such demands: it provides a cost-effective SDS storage solution and introduces the cost-effective scalability that modern workloads require.

HPE Synergy 12000 Frame forms an excellent platform for high performance SDS storage that scales to meet business demand. The Frame is the base infrastructure that pools resources of compute, storage, fabric, cooling, power and scalability. IT can manage, assemble and scale resources on demand by using the Synergy Frame with an embedded management solution combining the Synergy Composer and Frame Link Modules.

The Synergy Frame is designed to meet today’s needs and future needs with continuing enhancements to compute and fabric bandwidths, including photonics-ready capabilities. Enhancements to the HPE Synergy 12000 Frame include several new capabilities:

• Support for HPE Synergy 480 and 660 Gen10 Compute Modules.

• Additional 2650W hot plug power supply options: HVDC, 277VAC, and -48VDC.

• Product version to comply with the Trade Agreements Act (TAA).

HPE Synergy is a single infrastructure of physical and virtual pools of compute, storage, and fabric resources, and a single management interface that allows IT to instantly assemble and re-assemble resources in any configuration. As the foundation for new and traditional styles of business infrastructure, HPE Synergy eliminates hardware and operational complexity so IT can deliver infrastructure to applications faster and with greater precision and flexibility.

The D3940 Storage Module provides a fluid pool of storage resources for the Composable Infrastructure. Additional capacity for compute modules is easily provisioned and intelligently managed with integrated data services for availability and protection. The 40 SFF drive bays per storage module can be populated with 12G SAS or 6G SATA drives.

Expand up to 5 storage modules in a single Synergy 12000 Frame for a total of 200 drives (5 modules × 40 bays). Any drive bay can be zoned to any compute module for efficient use of capacity without fixed ratios.

A second HPE Synergy D3940 I/O Adapter provides a redundant path to disks inside the storage module for high data availability.

The HPE Synergy D3940 Storage Module and HPE Synergy 12Gb SAS Connection Module are performance optimized in a non-blocking 12 Gb/s SAS fabric.

Labels:

HPE,

SDS,

Storage Spaces Direct,

Storage Virtulization,

Synergy,

VSA,

vSAN

Use Cases of Ceph Block & Ceph Object Storage

OpenStack Ceph was designed to run on general purpose server hardware. Ceph supports elastic provisioning, which makes building and maintaining petabyte-to-exabyte scale data clusters economically feasible.

Many mass storage systems are great at storage, but they run out of throughput or IOPS well before they run out of capacity—making them unsuitable for some cloud computing applications. Ceph scales performance and capacity independently, which enables Ceph to support deployments optimized for a particular use case.

Here, I have listed the most common use cases for Ceph Block & Object Storage.

Labels:

Ceph,

Data Center,

DataLake,

DevOps,

KVM,

OpenStack,

Storage Virtulization

Friday, October 27, 2017

Unified Storage with OpenStack Ceph

OpenStack Ceph Storage is a massively scalable, programmable storage platform that supports cloud infrastructure, media repositories, backup and restore systems, and data lakes. It can free you from the expensive lock-in of proprietary, hardware-based storage solutions; consolidate labor and storage costs into a single versatile solution; and introduce the cost-effective scalability that modern workloads require.

OpenStack Cloud platforms are now becoming popular for enterprise computing. Enterprise customers are embracing OpenStack Cloud to lower their costs: it reduces license costs and eliminates the need for dedicated storage systems. From an enterprise workload perspective, there are multiple storage requirements:

1. High performance Block Storage

2. High throughput File Storage

3. Cost Effective Object Storage

In OpenStack, Ceph storage provides a unified storage interface for Block, File & Object Storage. This, plus the ability to scale out to thousands of nodes, makes Ceph storage a preferred SDS solution when implementing an OpenStack Cloud Platform.

When an OpenStack solution is built on scale-out servers like the HPE Apollo platform, which provides the highest storage & compute density per rack, the cost economics of an on-premise solution beat those of public cloud offerings.

OpenStack Ceph Storage is designed to meet the following requirements:

1. High availability

2. Security

3. Cost Effective

4. Scalability

5. Configurable

6. Performance

7. Usability

8. Measurable

Labels:

Ceph,

OpenStack,

Storage Virtulization

Thursday, October 26, 2017

Bitcoin is not Anonymous

One of the common misconceptions about Bitcoin is its supposed anonymity. In reality, it is not anonymous. Every Bitcoin user takes up a pseudonym, which is their public key, and this public key does not reveal the user's name or identity. Because they use a pseudonym, users tend to think that Bitcoin is anonymous, but it is not. Let me explain below:

The pseudonym is now used for all transactions and all the transactions are recorded in a shared distributed ledger - i.e., everyone can see all transactions. This implies that all transaction data is available for any big data analytics.

By combining transaction data with data from a person's mobile phone (location data, social network data, internet access data, etc.), it is possible to triangulate the pseudonym to the identity of a real person. With rapid advances in real-time big data analytics, it is now possible to link a bitcoin address to a real-world identity.

A bitcoin business such as an online wallet service, an exchange, or a merchant usually wants your real-world identity for transactions with it, for example credit card information or a shipping address. At coffee shops or restaurants that accept bitcoins, the buyer must be physically present in the store, and the store clerk or attendant gets to know the buyer. In either case the pseudonym now gets associated with the real-world identity. The same information can be gleaned from the digital trail of cell phone data, CCTV grabs, etc. Information like geographic location, time of day, and social network postings (Twitter, Facebook, etc.) can all be collected remotely and analyzed, and soon a pseudonym can be associated with a real-world identity.
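The linking step described above can be sketched in a few lines. The example assumes a toy public ledger and a merchant's private order records; every address, name, and value is invented for illustration, and real-world analysis would use far fuzzier matching than exact amount and time.

```python
# Toy de-anonymisation by joining a public ledger with off-chain records.
# Addresses, names, and records are invented for illustration.

ledger = [  # visible to everyone
    {"from": "addr_A", "to": "addr_shop", "amount": 0.002, "time": "2017-10-20T09:05"},
    {"from": "addr_A", "to": "addr_X", "amount": 1.500, "time": "2017-10-21T18:30"},
    {"from": "addr_B", "to": "addr_shop", "amount": 0.003, "time": "2017-10-20T11:40"},
]

shop_orders = [  # known only to the merchant: who paid, how much, and when
    {"customer": "Jane Doe", "amount": 0.002, "time": "2017-10-20T09:05"},
]


def link_identities(ledger, orders, shop_address):
    """Map a paying address to a customer when amount and time both match."""
    identities = {}
    for order in orders:
        for tx in ledger:
            if (tx["to"] == shop_address
                    and tx["amount"] == order["amount"]
                    and tx["time"] == order["time"]):
                identities[tx["from"]] = order["customer"]
    return identities


identities = link_identities(ledger, shop_orders, "addr_shop")
# Once one payment is linked, every transaction from that address is exposed.
history = [tx for tx in ledger if tx["from"] in identities]
print(identities)
print(len(history))
```

A single matched purchase is enough to attach a name to the address, and the shared ledger then reveals that address's other transactions as well.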

Labels:

analytics,

BigData,

bitcoin,

blockchain,

Information Security

Wednesday, October 25, 2017

Tuesday, October 24, 2017

Tuesday, October 17, 2017

Serverless Computing on Common IT Infrastructure

Customers want flexible deployment options. In this configuration, IT can offer hosting for traditional apps running on VMs. In addition, container-based workloads can be deployed on the same underlying infrastructure. On OpenStack, orchestration frameworks like Kubernetes and CloudForms allow a great level of flexibility.

On top of the Kubernetes cluster, one can also deploy Fission to create a serverless platform that supports on-demand services for new IoT & mobile apps, while retaining a single pane of glass for management APIs and providing a unified multi-tenancy mechanism.

This unified platform allows IT to offer all three application deployment options: traditional business applications running on VMs, container apps, and a serverless computing platform.

The open source serverless framework Fission uses Kubernetes to manage serverless functions: creation, termination and, if needed, parallel invocation. While it is a major technological breakthrough, it is also a very important financial innovation in how compute resources are offered over the cloud.

Kubernetes event handlers are used to create custom automation in the form of backend APIs or webhooks. The Kubernetes cluster manager then manages the backend delivery based on demand.
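To give a feel for on-demand function delivery, here is a minimal sketch of a router that loads a function only when its first request arrives and counts invocations. The routes, handlers, and the `FunctionRouter` class are hypothetical; this shows the general idea only and is not how Fission or Kubernetes implement it.

```python
# Toy on-demand function dispatcher (illustrative; not Fission's implementation).
# Route names and handlers are hypothetical.

def hello(event):
    return f"Hello, {event.get('name', 'world')}!"


def sensor_reading(event):
    # Convert a raw sensor value (tenths of a degree) to Celsius.
    return {"device": event["device"], "celsius": round(event["raw"] * 0.1, 1)}


class FunctionRouter:
    """Loads a function on first request and counts invocations."""

    def __init__(self, registry):
        self.registry = registry   # route -> function, known but not yet loaded
        self.warm = {}             # route -> function, loaded on first use
        self.invocations = {}      # route -> number of calls served

    def invoke(self, route, event):
        if route not in self.warm:             # cold start: load only on demand
            self.warm[route] = self.registry[route]
        self.invocations[route] = self.invocations.get(route, 0) + 1
        return self.warm[route](event)


router = FunctionRouter({"/hello": hello, "/iot/reading": sensor_reading})
print(router.invoke("/hello", {"name": "IoT"}))
print(router.invoke("/iot/reading", {"device": "t-1", "raw": 215}))
print(router.invocations)
```

The per-route invocation count hints at the financial side of the model: resources are consumed, and could be billed, only when a function is actually called.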

I expect to see a significant disruption of cloud service offerings with this serverless computing.

In my next few upcoming blogs, I will write more about the software layers and architecture needed to offer serverless computing.

Labels:

CloudFourm,

Containers,

GlusterFS,

KVM,

OpenStack,

Serverless computing

Saturday, October 14, 2017

Friday, October 13, 2017

R3 Corda Application Architecture

Corda is a distributed ledger platform designed to record, manage and automate legal agreements between business partners. Designed by (and for) the world's largest financial institutions, it offers a unique response to the privacy and scalability challenges facing decentralised applications.

Corda's development is led by R3, a Fintech company that heads a consortium of over 70 of the world's largest financial institutions in the establishment of an open, enterprise-grade, shared platform to record financial events and execute smart contract logic.

Corda is now supported by a growing open-source community of professional developers, architects and hobbyists.

What makes Corda different?

1. Engineered for business

Corda is the only distributed ledger platform designed by the world's largest financial institutions to manage legal agreements on an automatable and enforceable basis

2. Restricted data sharing

Corda only shares data with those with a need to view or validate it; there is no global broadcasting of data across the network

3. Easy integration

Corda is designed to make integration and interoperability easy: query the ledger with SQL, join to external databases, perform bulk imports, and code contracts in a range of modern, standard languages

4. Pluggable consensus

Corda is the only distributed ledger platform to support multiple consensus providers employing different algorithms on the same network, enabling compliance with local regulations

Closing Thoughts

Corda provides the opportunity to transform the economics of financial firms by implementing a new shared platform for the recording of financial events and processing of business logic: one where a single global logical ledger is authoritative for all agreements between firms recorded on it. This architecture will define a new shared platform for the industry, upon which incumbents, new entrants and third parties can compete to deliver innovative new products and services.

Labels:

blockchain,

FIntech,

R3 Corda

Thursday, October 12, 2017

Monday, July 24, 2017

Product Management 101 - Customer validation is the key

Recently, I was having lunch with a co-founder of a startup in Bangalore. They have a vision which sounds good on the surface: provide data loss protection on Cloud. Though this sounds like an old & proven idea, they have a very good secret sauce which gives them a unique value proposition: security, cost benefits & a much better RPO/RTO than the competition.

Like most entrepreneurs, he started out by validating his product idea with customers. He began with a customer survey, asking customers about their pain points and then asking them: "If this product solves your problem, will you buy it?"

Customer validation is a good point to start, but one must also be aware that such a survey can lead to several pitfalls.

- Customer needs could change with time, and customers may no longer be interested when the product is launched.

- The customer just expressed his 'wants' and not his 'needs', and may not pay for the actual product.

- The customer has no stake in the product. Just answering a few questions was easy: there was no commitment or risk.

All these risks imply that customer validation may result in false positives.

False positive is a known risk factor in new product development and startups often take such risks. In case of my friend's startup, he took that risk and decided to invest in developing a prototype.

Several months have gone by and his company is busy building the prototype. His biggest fear is that customers may not embrace his product, and he is constantly changing what his MVP (Minimum Viable Product) should be.

What is a Minimum Viable Product?

A minimum viable product (MVP) is the most pared down version of a product that can still be released. An MVP has three key characteristics:

It has enough value that people are willing to use it or buy it initially.

It demonstrates enough future benefit to retain early adopters.

It provides a feedback loop to guide future development.

The idea of an MVP is to ensure that one can develop a basic product which early adopters will buy, use & give valuable feedback on, which can help guide the next iteration of product development.

In other words, MVP is the first version of a customer validated product.

The MVP does not generate profits; it is just a starting point for subsequent product development, which in turn results in rapid growth and profits.

Customers who buy the MVP are the innovators & early adopters, and no company can be profitable serving just the early adopters. But a successful MVP opens the pathway towards the next iterations of the product, which will be embraced by the majority of customers: 'Early Majority', 'Late Majority' and 'Laggards'.

MVP is also expensive for startups

For a lean startup, developing an MVP can be expensive. An MVP is based on the Build -> Measure -> Learn process, which, when applied to the entire product at once, behaves like a waterfall model.

There are two ways to reduce risks associated with developing an MVP. One way to reduce risks is to avoid false positives.

While conducting market research during the customer validation process, one must ensure that the customer is invested in this product development.

At first sight, it is not easy to get customers to invest in new product development. Customers can invest their Time, Reputation &/or Money.

Getting customers to spend time on the potential solution to their problem is the first step.

The second step would be to get them to invest their reputation. Can the customer refer someone else who also has the same problem/need? Is the customer willing to put his name down on the product as a Beta user? Getting customers to invest their reputation would most often eliminate the risk of false positives.

One good way to get customers to invest their reputation is to create a user group or community, where customers with similar needs can interact with each other and with the new product development team, while helping new product development.

In the case of B2B products, customers can also invest money in new product development. Getting customers to invest money is not so tough. I have seen this happen on several occasions. I call this co-development with customers (see my blog on this topic).

Kickstarter programs have now taken hold, and today startups are successfully using them to get customers to invest money in their new product development.

Accelerating the Development Cycle & Lowering Development Costs

A lean startup should avoid developing unwanted features.

Once customers are invested in this new product, the startup will usually start developing the product and march towards creating the MVP. However, it is common to develop a product and then notice that most customers do not use 50% of the features that are built!

Lean startup calls for lowering wastage by not building unused features. The best way to do this is to run short tests and experiments on simulation models. First build a simulation model and ask customers to use it and get their valuable feedback. Here we are still doing the Build -> Measure -> Learn process, but we are doing it on feature sets and not the entire product. This allows for a very agile product development process and minimizes waste.

Run this simulation model with multiple customers and create small experiments with the simulation model to get the best possible usage behavior from customers. These experimental models are also termed Minimum Viable Experiments (MVE), and they form the blueprint for the actual MVP!

Running such small experiments has several advantages:

- It ensures that potential customers are still invested in your new product.

- Helps develop features that are more valuable/rewarding than others.

- Helps build a differentiated product, which competes on how customers use the product rather than on having the largest set of features.

- Helps learn how users engage with your product.

- Helps create more bang for the buck!

Closing Thoughts

In this blog, I have described the basic & must-do steps in lean product development, which are the fundamental aspects of product management.

Customer validation is the key to new product success. However, running only a basic validation with potential customers carries a big risk of false positives and of investing too much money in developing the MVP.

Running a smart customer validation minimizes the risks while creating a lean startup or lean product development. A successful customer validation of a solution helps to get paying customers who are innovators or early adopters. This is the first and most important step in any new product development, be it a lean startup or a well established company.
